This blog originally ran on the Antmicro website. For more Zephyr development tips and articles, please visit their blog.

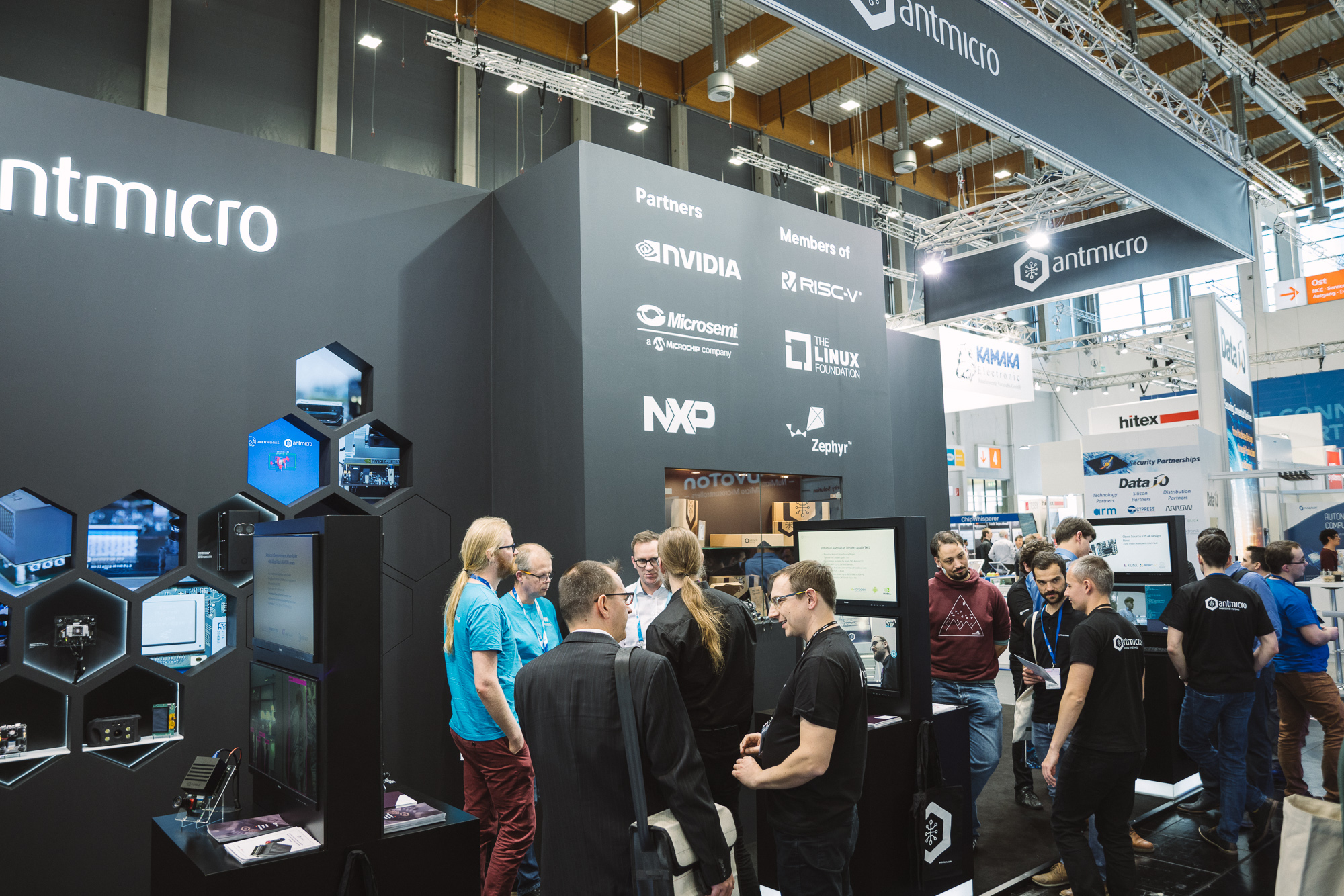

Zephyr Project member Antmicro helps its customers build advanced, modular, software-centric, edge-to-cloud AI systems backed by a sound, open-source-driven methodology. With so much momentum in open digital design, not only in the US but globally, we're not wasting a second of it. As in previous years, the upcoming Embedded World 2020 in Nuremberg, Germany (February 25-27) provides a great opportunity to meet European partners, customers and like-minded people interested in our technologies.

Visit Antmicro's slick black booth, located as last year in Hall 4A (#4A-621), for a multitude of technology demonstrators covering computer/machine vision, FPGA SoCs, edge-to-cloud AI systems, open hardware and software development, and open tooling, as well as new design methodologies with Renode simulation and rapid-turnaround chiplet-based ASICs.

New (and open) FPGA/ASIC development workflows

Over the years, Antmicro has been at the forefront of FPGA SoC technology, designing effective high-speed signal platforms for edge computing (especially in CV/MV multi-camera applications). Combined with our end-to-end services covering FPGA IP, AI/camera processing, BSPs and drivers (Linux, Zephyr), this puts us in a unique position to leverage open FPGA development workflows, tools and languages such as Chisel and Migen.

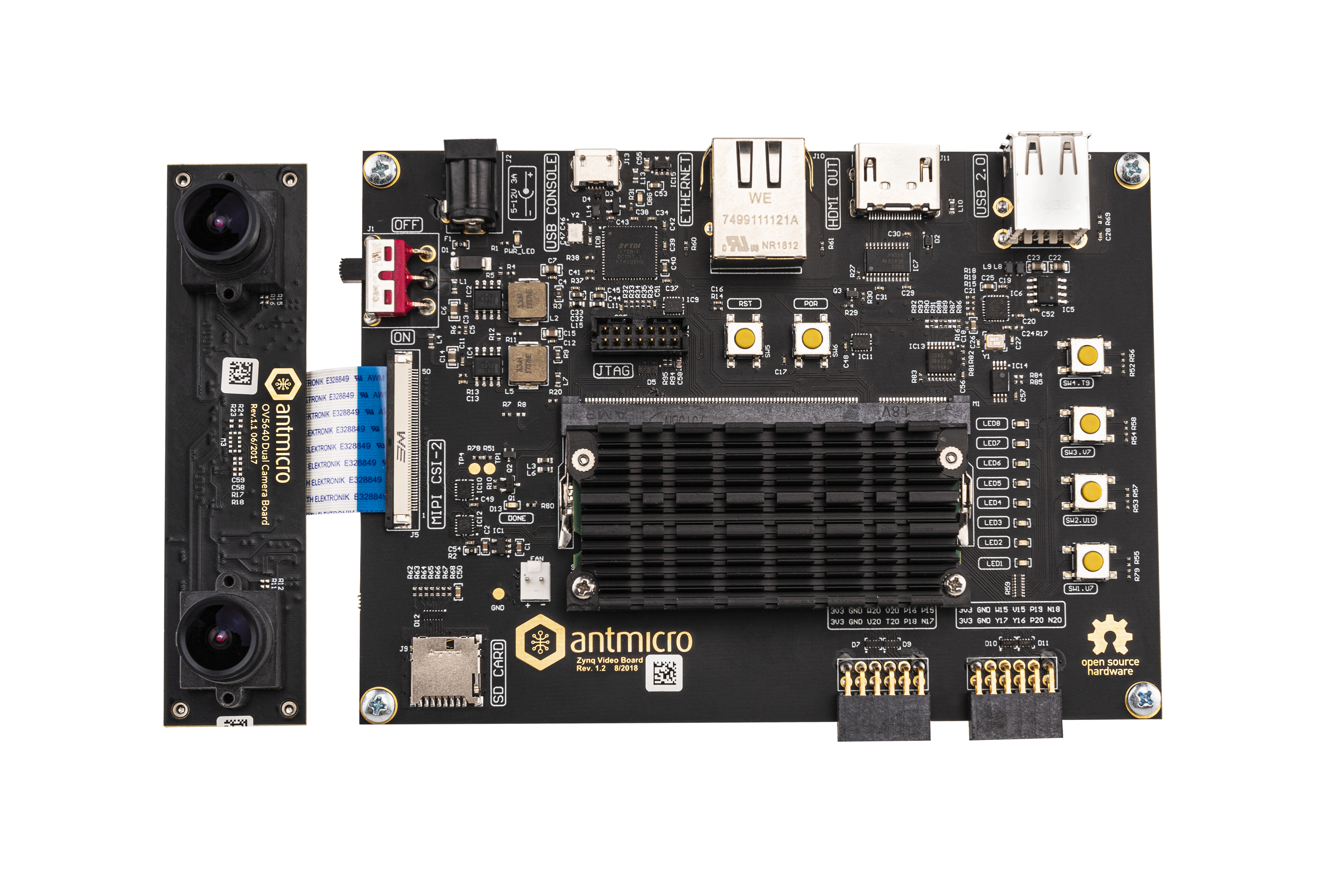

Antmicro's Zynq Video Board is a smart piece of open hardware for image processing, with an open FPGA MIPI CSI-2 IP core for grabbing video streams. Fully open source, the board features HDMI, Ethernet and SD card support, and will use Xilinx Zynq (or other Enclustra FPGA SoMs) to interface with up to two 2-lane MIPI CSI-2 cameras. Fittingly, the camera sensors are controlled by Zephyr RTOS (see and learn more at booth #4-170) running on a VexRiscv RISC-V soft CPU in an SoC built with LiteX, a likewise open source SoC generator.

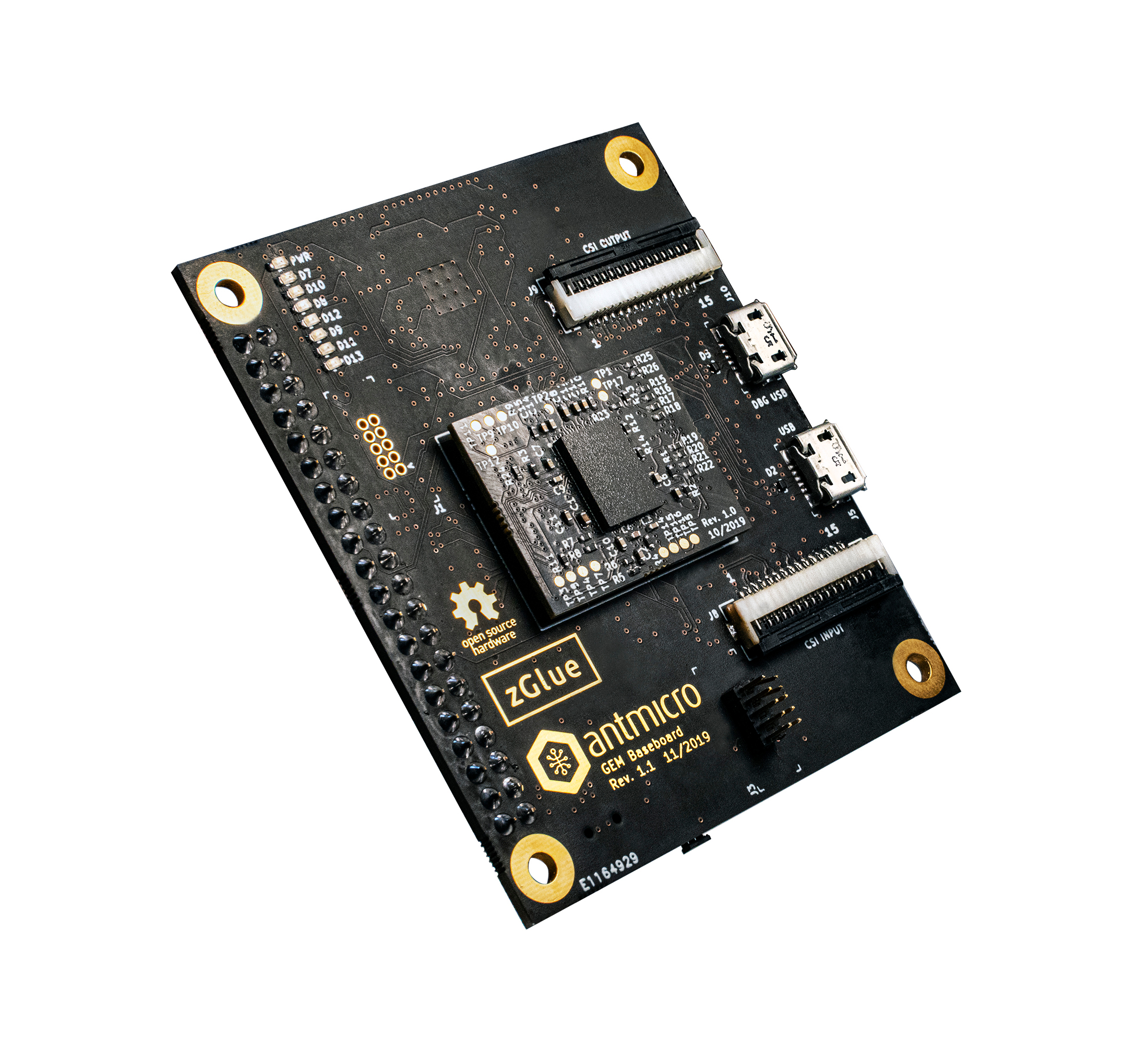

Perhaps even more exciting: at the recent RISC-V Summit, Antmicro unveiled GEM, its series of rapid-turnaround, chiplet-based ASICs. Using zGlue's smart ASIC SiP development technology (#4A-364), Antmicro engineers can now create full-blown custom hardware in a practical quick-prototyping / quick-tape-out process, with all the benefits of modern open digital design. In Nuremberg, we will show the GEM series in the context of our OSHW development services (baseboard, modules), BSP development (GEM running Zephyr on a Raven RISC-V soft CPU), FPGA development (GEM built around two Lattice iCE40 FPGAs) and AI development (live video analysis in a Lattice iCE40 with a neat MIPI CSI-2 switch for on-the-fly re-routing to the camera), giving a complete overview of our design approach.

Advanced edge AI systems

Thanks to innovative edge computing platforms (be they GPGPU-, FPGA- or heterogeneous CPU-based) and custom ASIC accelerators, deep learning has been making its way into new use cases, and Antmicro's in-depth competence spans all of these areas.

One outstandingly successful use case in the civil defence area is SkyWall Auto, a world-first automatic counter-drone system for which Antmicro developed the identification and tracking module. In recent high-profile tests, SkyWall Auto has already proven its ability to physically capture drones in front of US military and government agencies, successfully engaging multi-rotor and fixed-wing targets in a range of scenarios. This solution, which relies on specially trained neural networks for the AI-driven capture of trespassing drones, will be another highlight of our booth this year.

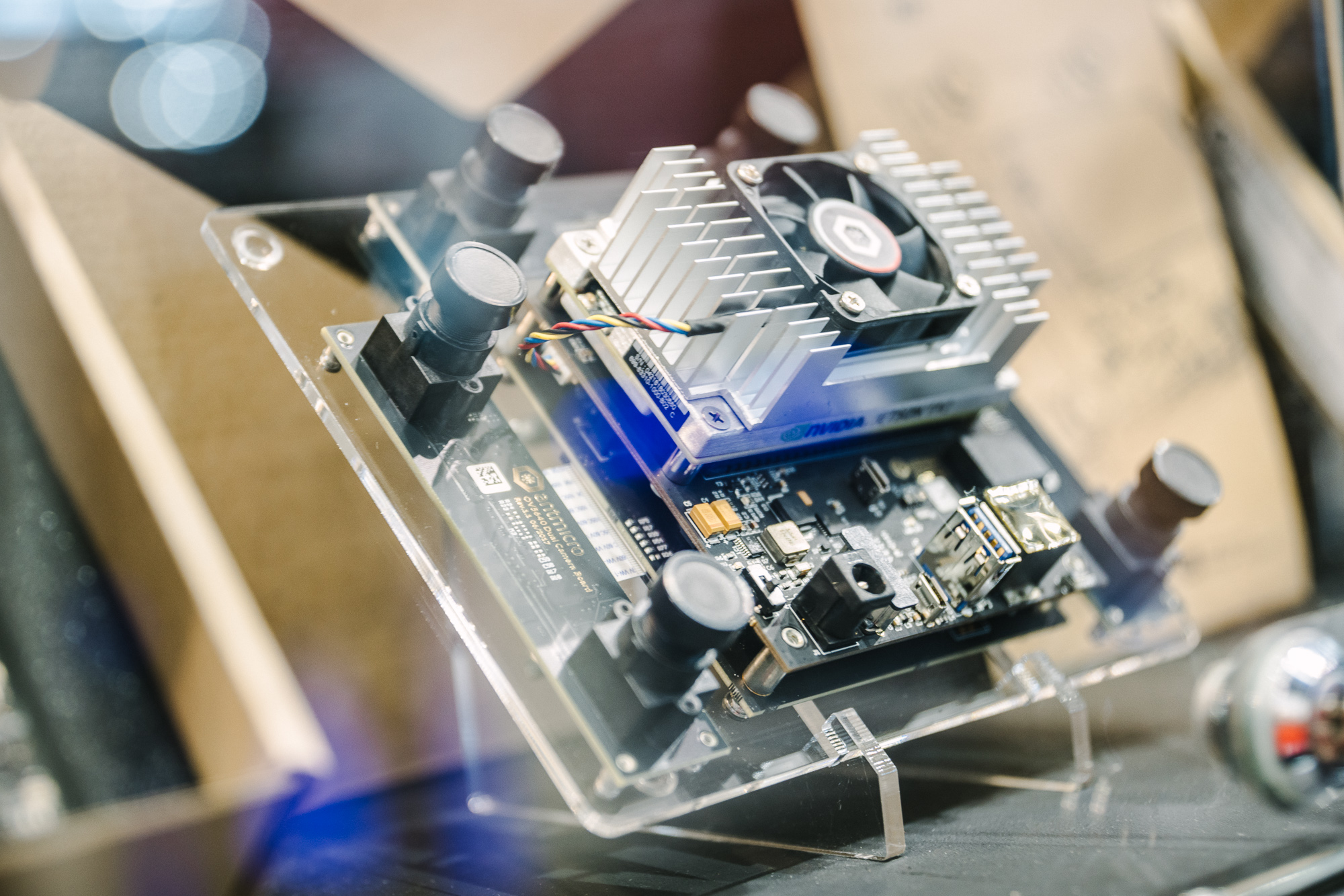

Another example of our scalable design approach on display will be a high-speed industrial stereovision camera platform for real-time, AI-supported 3D vision processing. Comprising a stereovision camera module based on Antmicro's UltraScale+ Processing Module and our own TX2 Deep Learning baseboard for object tracking, detection and classification, the device is a modular concept that can easily be expanded or adapted for larger industrial systems, such as the X-MINE smart mining project we've been participating in.

While many of our projects focus on high-end, multi-core and heterogeneous processing systems, many use cases require AI algorithms to run on small, resource-constrained devices. Antmicro's Renode team and Google's TensorFlow Lite team have been collaborating to use the Renode simulator for demonstrating and testing Google's machine learning framework, and to bring it to new frontiers with real industrial use cases. After porting the framework to RISC-V, we have now enabled TF Lite Micro to work well with Zephyr on LiteX/VexRiscv.

What helps us achieve high quality and offer effective scalability in our edge AI solutions is our open Renode simulation framework, together with the Continuous Integration / Continuous Development practices available in the Renode Cloud Environment (RCE), which allow us to perform reproducible builds, mitigate the black box effect and ensure traceability of the software stack. At this year's show, see how we use our custom CI setups deployed in RCE with a broad range of demonstrator tests. Renode's ability to co-simulate SoC and FPGA components with Verilator, or to connect blocks running in physical eFPGAs to enable a "divide-and-conquer" HW/SW co-design philosophy, fits perfectly with the hybrid SoC FPGA nature of the EOS S3 platform we'll also be showing (read more below).

Open source software/BSP and hardware development

Our presence at EW 2020 will naturally revolve around non-proprietary software and hardware, as we strongly believe that open source is changing the world of tech for the better by making useful tools accessible, transparent and easily modifiable. In addition, the lack of vendor lock-in gives us and our customers the freedom to build solutions on the best technologies available.

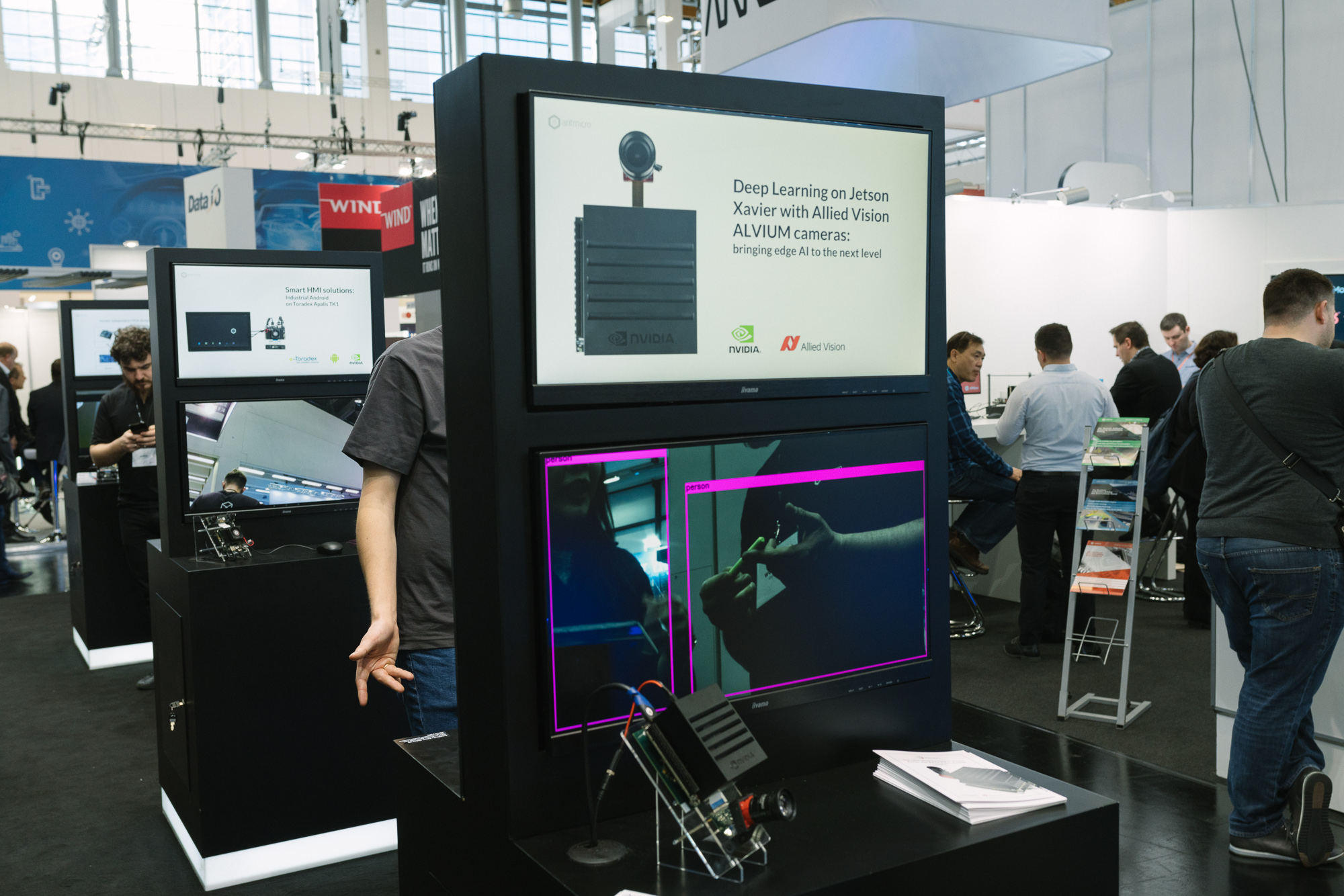

Over the last year, Antmicro has collaborated with Allied Vision to provide software support for their innovative Alvium camera series. Working with a partner that shares our vision of open technologies, we have already released drivers for the Jetson TX2 that support all Alvium cameras released so far and will ultimately cover future ones, too. See them at our booth running on Antmicro's TX2/TX2i Deep Learning Kit, an NVIDIA Jetson-powered platform that can drive up to 6 MIPI CSI-2 cameras. A beta release with early Jetson Nano support is also available for those who cannot wait for the final version!

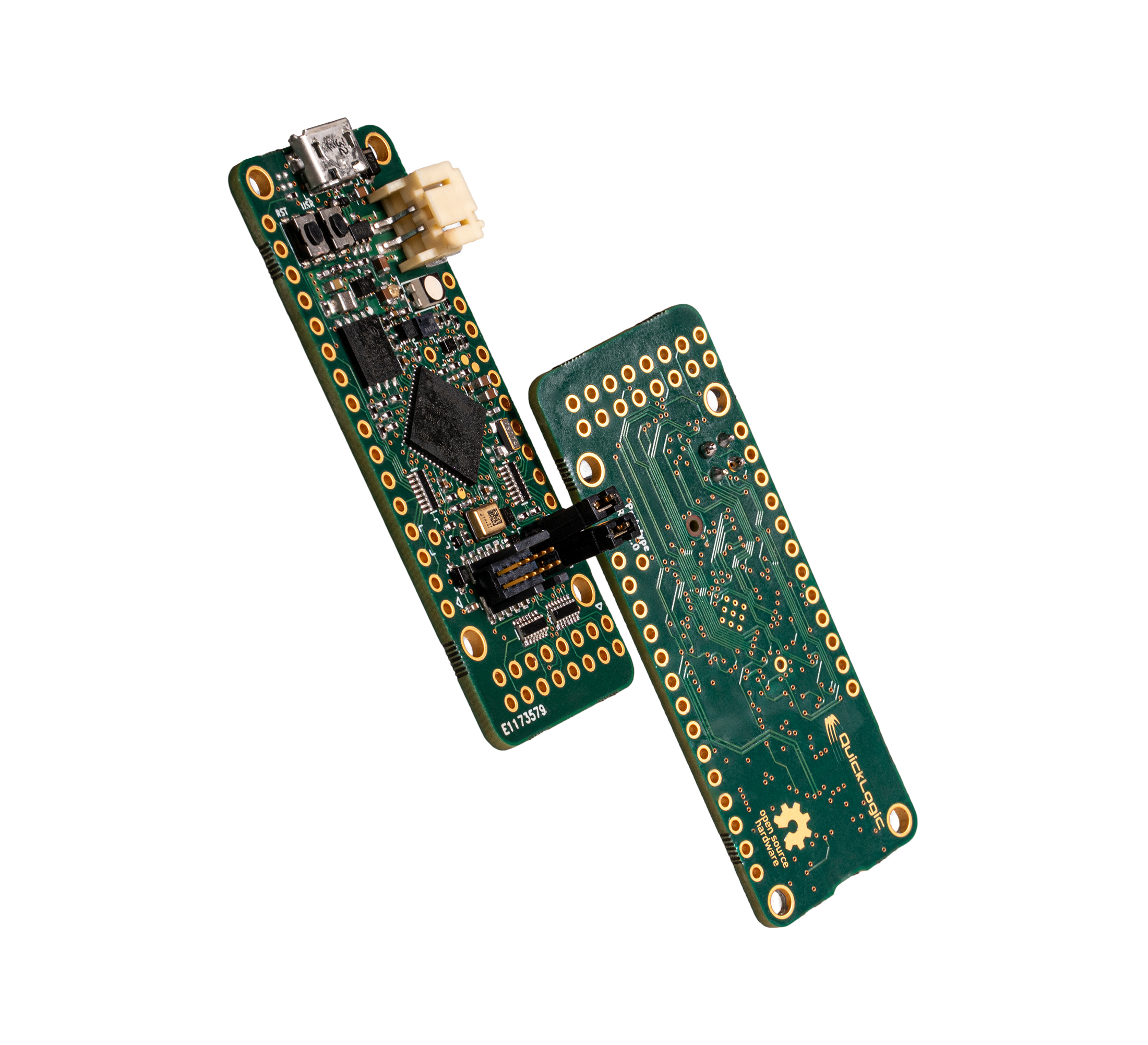

We're happy to see more of our customers embrace our open source-oriented vision and the new design methodologies we promote. Antmicro has recently ported Zephyr to QuickLogic's QuickFeather development board for the EOS S3, as well as to Qomu, the soon-to-be-released addition to the open source Tomu family of tiny USB devices. Both will be showcased by Antmicro at EW 2020. The EOS S3 is now also supported in Antmicro's open source Renode simulation framework for rapid prototyping, development and testing of multi-node systems, offering Zephyr developers a more efficient hardware/software co-design approach. You can see this support, as well as other Renode demos, in action at our booth.

We are very excited to be coming back to Embedded World with a range of captivating technology demonstrators. The Antmicro team can't wait to meet you, so visit us in Hall 4A, booth #4A-621, or schedule a meeting beforehand at contact@antmicro.com, and let's talk tech!