Written by Mike Szczys, Zephyr Ambassador and Developer Relations Engineer at Golioth

This blog originally ran on the Golioth website. For more content like this, click here.

Zephyr RTOS is built to let you do multiple things at the same time using a single processing core (or a limited number of cores). To be fair, you’re not really doing things at the same time; the RTOS shares processing time across all of the tasks, with a priority system that favors the more important ones.

The trick with an RTOS is to design your applications so that they utilize the real-time-ness and you don’t miss reacting to real-time events like receiving data from a network, taking sensor readings, or reacting to user input.

In this post, we’ll discuss the difference between Zephyr threads and work queues and show you why you might want to use one versus the other. We’ll also discuss how to use message queues to pass around data between running processes.

Zephyr Threads

A thread is a set of commands to be executed by the CPU. The while(1) loop that runs inside of the main function of your application is a thread. But Zephyr allows you to create multiple threads. Each thread operates independently, with Zephyr’s built-in scheduler deciding when to run each thread.

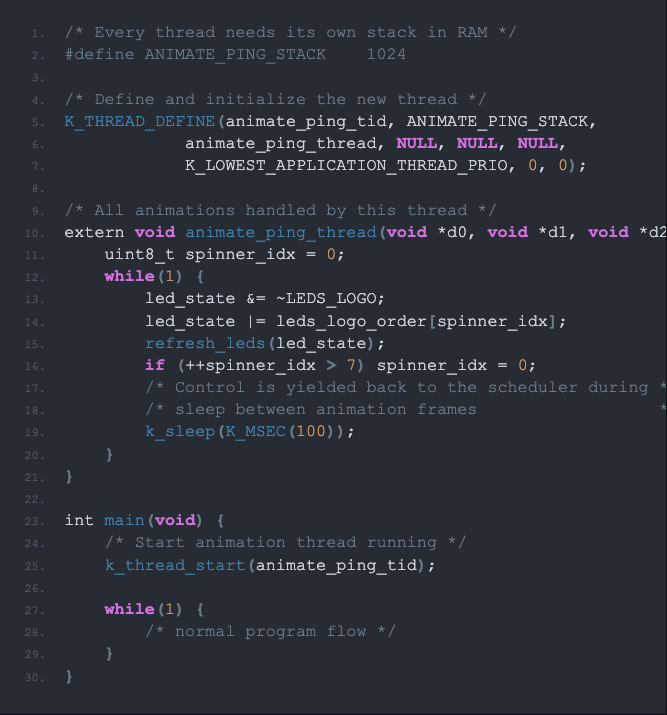

For example, the Golioth Blue Demo runs animations on the LEDs to indicate what mode the device is in. Instead of trying to get the loop in the main thread to update the lights on a tight schedule, we use a thread to run the animations. The main thread of the program deals with the flow of things happening on the device, and it can suspend/resume the animation thread as necessary.

I like to think of threads as tasks that need to keep running, things that don’t return like a discrete function would. Your thread should have a while(1) loop and can call other functions just like you would in your main loop. It is up to you to yield processor control back to the scheduler so that it may run other threads. This is pretty easy: just call any of the k_sleep() functions or k_yield().

The example shown here runs an animation when an IoT device is trying to connect to a network. When it begins running, the LEDs will update every 100 milliseconds. Because the loop sleeps in between frames, control is given back to the scheduler so that other tasks can run. Elsewhere in the program, we call k_thread_suspend(animate_ping_tid) and k_thread_resume(animate_ping_tid) to stop and start the animation.

Just be careful that you don’t have multiple threads trying to access the same resource at the same time (protect those resources with a mutex).

Zephyr Work Queues

A Zephyr work queue is a thread with extra features bolted on. It also requires less setup and management than a thread you create yourself.

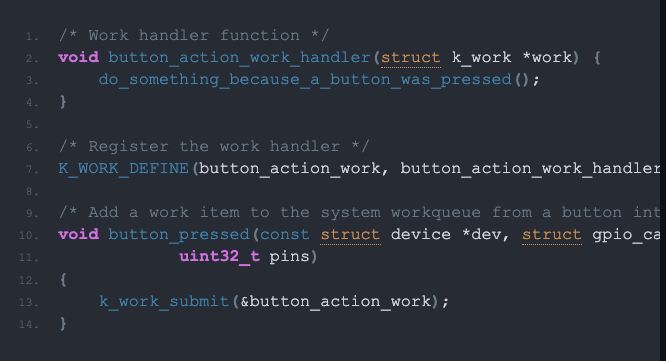

The work queue has a built-in buffer to store pending work (functions you want to run). Each item you add will be executed in the order you submitted it, like a “to-do list” for your processor. This is a perfect place for something that you want to run once and return from, but don’t want to do it inside of an interrupt service routine.

The “work” you submit to a work queue is a function. So you’re telling the work queue “run this function, then run this other function, then run this other…” you get the idea. Just set it and forget it; the Zephyr scheduler will take care of popping work out of the queue and running it until the queue is empty. Many of the sensor readings we do in our demos are handled through work queues.
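To make the “to-do list” idea concrete, here is a minimal host-side model in plain C. Treat it as a sketch of the concept, not as Zephyr’s implementation: work_item, work_queue, work_submit() and work_run_pending() are hypothetical names invented for this illustration. In a real application the corresponding pieces are struct k_work and k_work_submit(), and the system work queue runs pending items from its own thread rather than from a function you call yourself.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for Zephyr's struct k_work. */
struct work_item;
typedef void (*work_handler_t)(struct work_item *item);

struct work_item {
    work_handler_t handler; /* the function to run later */
};

#define MAX_PENDING 8

/* A fixed-size FIFO of pointers to pending work items. */
struct work_queue {
    struct work_item *pending[MAX_PENDING];
    size_t head;  /* index of the oldest pending item */
    size_t count; /* number of pending items */
};

/* Add an item to the end of the list. Returns 0 on success, -1 if full. */
int work_submit(struct work_queue *q, struct work_item *item)
{
    assert(q != NULL && item != NULL && item->handler != NULL);
    if (q->count == MAX_PENDING) {
        return -1;
    }
    q->pending[(q->head + q->count) % MAX_PENDING] = item;
    q->count++;
    return 0;
}

/* Run every pending handler in submission order. Returns how many ran. */
size_t work_run_pending(struct work_queue *q)
{
    size_t ran = 0;

    assert(q != NULL);
    while (q->count > 0) {
        struct work_item *item = q->pending[q->head];

        q->head = (q->head + 1) % MAX_PENDING;
        q->count--;
        item->handler(item);
        ran++;
    }
    return ran;
}

/* Example handlers that only record the order in which they ran. */
static char run_log[MAX_PENDING];
static size_t run_log_len;

static void log_run(char tag)
{
    if (run_log_len < sizeof(run_log)) {
        run_log[run_log_len++] = tag;
    }
}

void read_sensor(struct work_item *item) { (void)item; log_run('S'); }
void blink_led(struct work_item *item) { (void)item; log_run('L'); }
```

Submitting a work item for read_sensor followed by one for blink_led, then calling work_run_pending(), runs the two handlers in that order and leaves the queue empty.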

The work queue expects a specific type of function usually referred to as a “work handler”. You don’t actually give the handler any information; you just tell your work queue you want to add your handler to the list of pending work.

This code snippet demonstrates using a work queue when a button is pressed. You want to spend as little time as possible in the button interrupt function. All this handler does is queue up a button action function that will be run by the scheduler, sometime after the interrupt service routine ends.

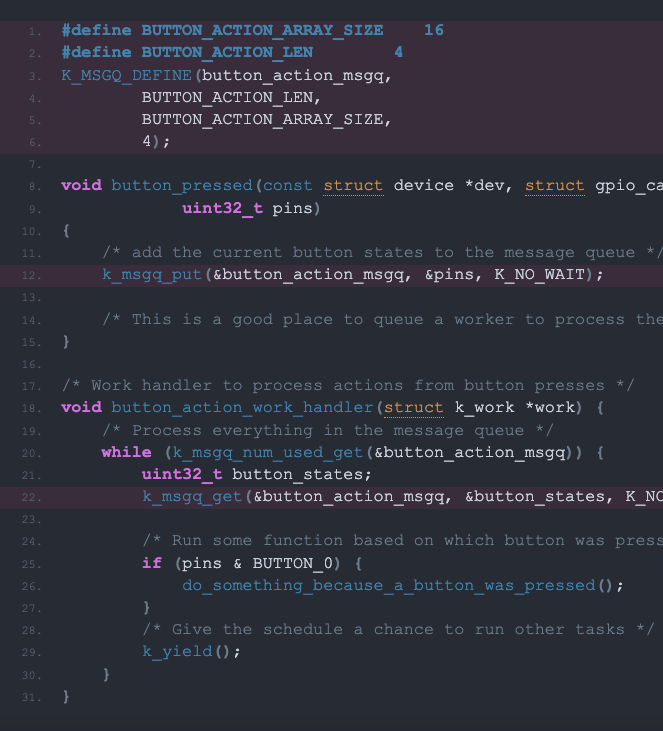

The challenge here is how to get data to the worker since we can’t pass any parameters. How do you know which button was pressed? One approach is to place your work item inside of a struct along with the data you want to pass (button number, etc.). Then in the handler you can use CONTAINER_OF() to access the data in the structure. This is beyond the scope of today’s post, so let’s talk about a somewhat simpler approach we often take: using a message queue.
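For the curious, the struct approach mentioned above fits in a few lines. This is a host-side sketch in plain C under stated assumptions: work_item is a hypothetical stand-in for Zephyr’s struct k_work, button_work and button_action() are made-up names, and the macro is defined locally so the example compiles on its own (Zephyr ships its own CONTAINER_OF(), so you would not define it in a real application).

```c
#include <assert.h>
#include <stddef.h>

/* Local version of the container-of idiom: recover a pointer to the
 * enclosing struct from a pointer to one of its members. */
#define CONTAINER_OF(ptr, type, field) \
    ((type *)((char *)(ptr) - offsetof(type, field)))

/* Hypothetical stand-in for Zephyr's struct k_work. */
struct work_item {
    void (*handler)(struct work_item *item);
};

/* The work item travels together with the data its handler needs. */
struct button_work {
    struct work_item work;
    int button_number;
};

static int last_button_handled = -1;

/* The handler only receives the work item, so it steps back out to the
 * enclosing struct to find out which button was pressed. */
void button_action(struct work_item *item)
{
    assert(item != NULL);

    struct button_work *bw = CONTAINER_OF(item, struct button_work, work);

    last_button_handled = bw->button_number;
}
```

The handler signature stays the same for every button; the extra data lives next to the work item instead of being passed as an argument.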

Zephyr Message Queues

A Zephyr Message Queue is a collection of fixed-size data items that is safe to access from multiple threads. You decide what the data is (a variable, a struct, a pointer, etc.) and how many of those objects the queue can contain (limited by your available RAM). Zephyr handles all of the logic necessary for adding to and removing from the queue.

Continuing with our button example, the code above uses a message queue to record the button states for processing after the interrupt service routine returns. I’ve highlighted the lines that are crucial to the message queue system. Notice that the work handler uses a while loop that keeps reading until the message queue is empty. This is a nice pattern for processing all of the queued messages. I’ve used k_yield() between loops to give the scheduler a chance to run other tasks.
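If you want to experiment with the put/get/drain pattern away from hardware, it can be modelled in plain C. The sketch below is an assumption-laden illustration rather than Zephyr code: button_event, msg_queue, msgq_put(), msgq_get() and count_presses() are hypothetical names, and this single-threaded model has no locking at all. On a device you would use k_msgq_put() and k_msgq_get(), which are what make the queue safe to share between an interrupt handler and a thread.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical message type: one entry per button state change. */
struct button_event {
    int button_number;
    int pressed; /* 1 = pressed, 0 = released */
};

#define QUEUE_DEPTH 4

/* Fixed-size ring buffer; messages are copied in and out by value. */
struct msg_queue {
    struct button_event slots[QUEUE_DEPTH];
    size_t read_idx; /* index of the oldest message */
    size_t used;     /* number of messages stored */
};

/* Copy a message into the queue. Returns 0 on success, -1 if full. */
int msgq_put(struct msg_queue *q, const struct button_event *msg)
{
    assert(q != NULL && msg != NULL);
    if (q->used == QUEUE_DEPTH) {
        return -1;
    }
    q->slots[(q->read_idx + q->used) % QUEUE_DEPTH] = *msg;
    q->used++;
    return 0;
}

/* Copy the oldest message out. Returns 0 on success, -1 if empty. */
int msgq_get(struct msg_queue *q, struct button_event *out)
{
    assert(q != NULL && out != NULL);
    if (q->used == 0) {
        return -1;
    }
    *out = q->slots[q->read_idx];
    q->read_idx = (q->read_idx + 1) % QUEUE_DEPTH;
    q->used--;
    return 0;
}

/* Drain pattern: keep reading until the queue reports empty.
 * Returns the number of "pressed" events that were processed. */
int count_presses(struct msg_queue *q)
{
    struct button_event ev;
    int presses = 0;

    while (msgq_get(q, &ev) == 0) {
        if (ev.pressed) {
            presses++;
        }
    }
    return presses;
}
```

The interrupt side only ever calls the put function, and the worker side loops on the get function until it fails, so neither needs to know how many messages are waiting.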

Message queues are a spectacular tool for IoT applications. Golioth has used Message Queues to cache GPS readings while the cell modem is turned off on our Orange Demo. Periodically, the modem will turn on, send all of the readings from the queue to the Golioth servers, then turn off the radio once again to save power.

Threads, Workers, and Queues

We often focus on the “ecosystem” aspect of Zephyr, because we like that so many silicon vendors contribute to the code base. But it’s important to remember that Zephyr is a Real Time Operating System and has a fully featured scheduler. We’ve only just scratched the surface of what you can do with it. Hopefully the above explanations helped you to begin thinking in these patterns, understanding how to divide up the application work, and how to pass data between different parts of your code.

If you have any questions or comments, please connect with the Zephyr community on the Zephyr Discord Channel.