Written by Roy Jamil, Training Engineer at Ac6

Introduction

Welcome to part 3 of our series on multithreading problems. In the previous articles (part 1 and part 2), we explored the producer-consumer problem and discussed various solutions to tackle it. If you haven’t had a chance to read them yet, we highly recommend checking them out for a comprehensive understanding.

In this part, we explore the readers-writer(s) problem, which occurs when multiple threads require concurrent access to a shared resource. We will delve into the complexities of this challenge and present effective strategies to address it. Our focus will be on designing synchronization mechanisms that guarantee exclusive access for writers while allowing concurrent access for readers.

In this series of four blog posts, we discuss some of the most common problems that can arise when working with multithreaded systems, and we provide practical solutions to them. The series ranges from the producer-consumer problem, where multiple threads share a common buffer for storing and retrieving data, to the readers-writer problem, where multiple reader threads need to access a shared resource concurrently while writers need exclusive access.

Kernel features used

To lay a solid foundation for understanding the readers-writer solution, we begin by presenting the mutex mechanism.

A mutex, short for mutual exclusion, is a synchronization object that ensures exclusive access to a shared resource. It acts as a lock, allowing only one thread at a time to execute a critical section of code. In Zephyr, a mutex includes a counter that tracks how many times its owner has locked it, so the same thread can lock it recursively. In this example, however, we use the mutex in its binary form, with only two states: locked or unlocked. When a thread acquires the mutex, it locks it, preventing other threads from accessing the protected resource. The mutex also records which thread currently holds the lock, which identifies the thread that has exclusive access to the shared resource. Only when that thread releases the mutex does it become unlocked, allowing another thread to acquire and use it.
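To make the lock/unlock pattern concrete, here is a minimal sketch of a binary mutex protecting a shared counter. It is an illustration under assumptions, not code from this series: it uses POSIX threads so that it can run on a host machine, and the names `counter_mutex`, `shared_counter`, `incrementer` and `run_counter_demo` are hypothetical. In a Zephyr application the equivalent calls would be `k_mutex_lock()` and `k_mutex_unlock()`, as noted in the comments.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define NUM_THREADS 4
#define INCREMENTS  10000

/* Hypothetical shared resource and its lock (illustrative names). */
static pthread_mutex_t counter_mutex = PTHREAD_MUTEX_INITIALIZER;
static long shared_counter;

static void *incrementer(void *arg)
{
    (void)arg;
    for (int i = 0; i < INCREMENTS; i++) {
        /* Zephyr equivalent: k_mutex_lock(&counter_mutex, K_FOREVER) */
        pthread_mutex_lock(&counter_mutex);
        shared_counter++;                     /* critical section */
        /* Zephyr equivalent: k_mutex_unlock(&counter_mutex) */
        pthread_mutex_unlock(&counter_mutex);
    }
    return NULL;
}

/* Starts the threads, waits for them, and returns the final counter. */
long run_counter_demo(void)
{
    pthread_t threads[NUM_THREADS];

    shared_counter = 0;
    for (int i = 0; i < NUM_THREADS; i++) {
        int rc = pthread_create(&threads[i], NULL, incrementer, NULL);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < NUM_THREADS; i++) {
        pthread_join(threads[i], NULL);
    }
    return shared_counter;
}
```

Because every increment happens inside the critical section, no update is lost: `run_counter_demo()` returns `NUM_THREADS * INCREMENTS`. Without the mutex, concurrent increments could overwrite each other and the result could be lower.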

This example also uses semaphores. To learn more about them and their role in synchronization, please check out part 1 of this series, where we discussed the producer-consumer problem and explored different solutions that involve semaphores.

Readers-writer(s) problem

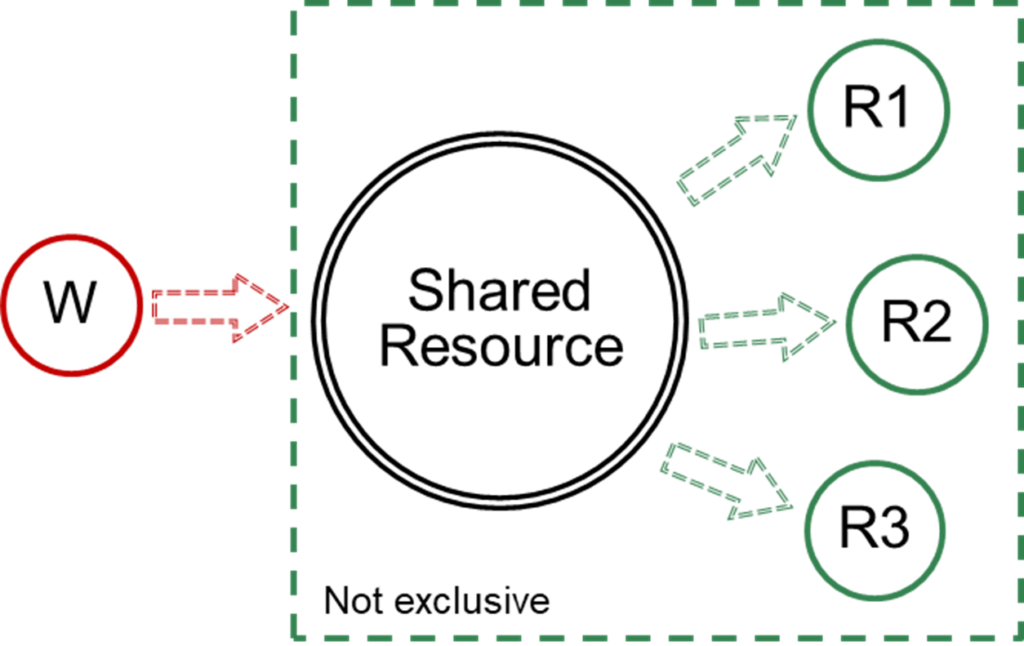

The readers-writer(s) problem is a classic example of a problem that can arise in real-time systems when multiple threads (readers and writers) need to access a shared resource. The problem is to ensure that multiple readers can access the resource concurrently, but writers have exclusive access to the resource while they are updating it.

Concretely, a reader thread only reads data from the shared resource, while a writer thread updates it. The challenge is to design a synchronization mechanism that lets any number of readers proceed at the same time, but blocks every other thread, reader or writer, while a writer is updating the resource.

When using only a mutex to solve the readers-writer(s) problem, a reader thread must acquire the mutex before accessing the resource and release it when it is done. This means that if multiple reader threads try to access the resource simultaneously, only one of them will acquire the mutex and access the resource, while the others have to wait until it releases the mutex. This waiting is unnecessary, because reads do not modify the resource and could safely run at the same time, and it reduces the overall performance of the system. To avoid this issue, we must use a different synchronization mechanism, such as a semaphore.
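The serialization described above can be observed with a small sketch. As an assumption for illustration, it again uses POSIX threads on a host rather than Zephyr, and all names (`resource_mutex`, `guarded_value`, `mutex_only_reader`, `run_mutex_only_demo`) are hypothetical. Each reader records how many readers are inside the critical section at the same time.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define NUM_READERS 4
#define READS       1000

static pthread_mutex_t resource_mutex = PTHREAD_MUTEX_INITIALIZER;
static int guarded_value = 42;   /* hypothetical shared resource */
static int readers_inside;       /* only touched while holding the mutex */
static int max_readers_inside;   /* highest value of readers_inside seen */

static void *mutex_only_reader(void *arg)
{
    long *sum = arg;

    for (int i = 0; i < READS; i++) {
        pthread_mutex_lock(&resource_mutex);
        readers_inside++;
        if (readers_inside > max_readers_inside) {
            max_readers_inside = readers_inside;
        }
        *sum += guarded_value;   /* the "read" of the shared resource */
        readers_inside--;
        pthread_mutex_unlock(&resource_mutex);
    }
    return NULL;
}

/* Returns the largest number of readers that were ever reading at once. */
int run_mutex_only_demo(void)
{
    pthread_t threads[NUM_READERS];
    long sums[NUM_READERS] = {0};

    readers_inside = 0;
    max_readers_inside = 0;
    for (int i = 0; i < NUM_READERS; i++) {
        int rc = pthread_create(&threads[i], NULL, mutex_only_reader, &sums[i]);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < NUM_READERS; i++) {
        pthread_join(threads[i], NULL);
    }
    return max_readers_inside;
}
```

`run_mutex_only_demo()` always returns 1: however many reader threads exist, the mutex never lets two of them read at the same time, even though nothing in the readers modifies the shared value.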

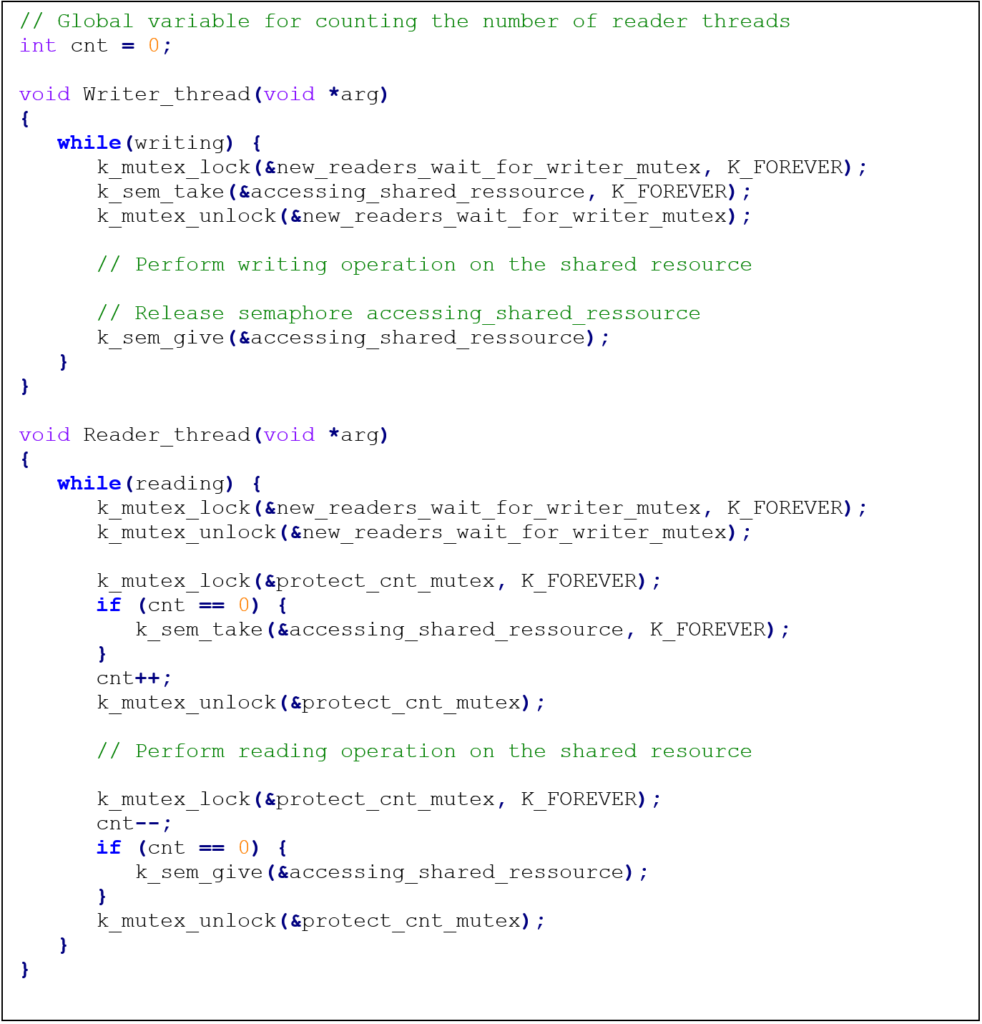

This implementation uses a combination of mutexes and semaphores to solve the readers-writer problem. It allows multiple reader threads to access the shared resource concurrently, while giving writers exclusive access to the resource when they need to update it.

The semaphore “accessing_shared_ressource” is used to synchronize access to the shared resource by reader and writer threads. It is initialized with a count of 1, which means that it can be held by only one party at a time: either a single writer or the group of active readers. When a writer thread wants to access the resource, it acquires the semaphore and releases it when it is finished. When a reader thread wants to access the resource, it first checks the value of the global variable “cnt”, which counts the readers currently reading. If “cnt” is 0, no other reader thread is accessing the resource, so the thread acquires the semaphore on behalf of all readers. If “cnt” is not 0, other reader threads are already reading and the semaphore is already held for them, so the thread does not need to acquire it. In both cases the thread then increments “cnt” and starts reading. When a reader finishes, it decrements “cnt”, and the last reader to leave (the one that brings “cnt” back to 0) gives the semaphore so that a writer can take it.

The mutex “protect_cnt_mutex” is used to protect the global variable “cnt” from being accessed concurrently by multiple reader threads.

The mutex “new_readers_wait_for_writer_mutex” is used to ensure that new reader threads must wait for any currently waiting writer thread to finish writing before they can start reading. This prevents the writer thread from being starved by the readers.
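The following sketch puts the semaphore and the two mutexes together for the single-writer case. It is an illustration under assumptions rather than the exact code of the example: it uses POSIX threads and a POSIX semaphore so that it can run on a host, whereas a Zephyr application would use `k_sem_take()`/`k_sem_give()` and `k_mutex_lock()`/`k_mutex_unlock()`. The synchronization object names follow the text; `shared_data`, the `*_inside` counters, `violations` and `run_readers_writer_demo` are hypothetical and exist only to check the behaviour.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>
#include <stdatomic.h>
#include <stddef.h>

#define NUM_READERS 3
#define ROUNDS      200

static sem_t accessing_shared_ressource;  /* initial count 1 */
static pthread_mutex_t protect_cnt_mutex = PTHREAD_MUTEX_INITIALIZER;
static pthread_mutex_t new_readers_wait_for_writer_mutex = PTHREAD_MUTEX_INITIALIZER;
static int cnt;                            /* readers currently reading */
static int shared_data;                    /* hypothetical shared resource */
/* Instrumentation for this sketch only, not part of the scheme. */
static atomic_int readers_inside, writer_inside, violations;

static void *reader(void *arg)
{
    (void)arg;
    for (int i = 0; i < ROUNDS; i++) {
        /* Gate: a new reader blocks here while a writer is waiting. */
        pthread_mutex_lock(&new_readers_wait_for_writer_mutex);
        pthread_mutex_unlock(&new_readers_wait_for_writer_mutex);

        pthread_mutex_lock(&protect_cnt_mutex);
        if (cnt == 0) {
            sem_wait(&accessing_shared_ressource); /* first reader takes it */
        }
        cnt++;
        pthread_mutex_unlock(&protect_cnt_mutex);

        atomic_fetch_add(&readers_inside, 1);
        if (atomic_load(&writer_inside)) {
            atomic_fetch_add(&violations, 1);      /* reader overlapped a writer */
        }
        int value = shared_data;                   /* the actual read */
        (void)value;
        atomic_fetch_sub(&readers_inside, 1);

        pthread_mutex_lock(&protect_cnt_mutex);
        cnt--;
        if (cnt == 0) {
            sem_post(&accessing_shared_ressource); /* last reader gives it */
        }
        pthread_mutex_unlock(&protect_cnt_mutex);
    }
    return NULL;
}

static void *writer(void *arg)
{
    (void)arg;
    for (int i = 0; i < ROUNDS; i++) {
        pthread_mutex_lock(&new_readers_wait_for_writer_mutex); /* hold back new readers */
        sem_wait(&accessing_shared_ressource);                  /* wait for current readers */
        pthread_mutex_unlock(&new_readers_wait_for_writer_mutex);

        atomic_store(&writer_inside, 1);
        if (atomic_load(&readers_inside) > 0) {
            atomic_fetch_add(&violations, 1);      /* writer overlapped a reader */
        }
        shared_data++;                             /* the actual write */
        atomic_store(&writer_inside, 0);

        sem_post(&accessing_shared_ressource);
    }
    return NULL;
}

/* Runs the readers and one writer; returns the number of overlaps detected. */
int run_readers_writer_demo(int *final_value)
{
    pthread_t readers[NUM_READERS], writer_thread;
    int rc;

    rc = sem_init(&accessing_shared_ressource, 0, 1);
    assert(rc == 0);
    cnt = 0;
    shared_data = 0;
    atomic_store(&violations, 0);
    for (int i = 0; i < NUM_READERS; i++) {
        rc = pthread_create(&readers[i], NULL, reader, NULL);
        assert(rc == 0);
    }
    rc = pthread_create(&writer_thread, NULL, writer, NULL);
    assert(rc == 0);
    (void)rc;
    for (int i = 0; i < NUM_READERS; i++) {
        pthread_join(readers[i], NULL);
    }
    pthread_join(writer_thread, NULL);
    sem_destroy(&accessing_shared_ressource);
    *final_value = shared_data;
    return atomic_load(&violations);
}
```

A design point worth noting: the resource is guarded by a semaphore rather than a mutex because the thread that takes it (the first reader) is not necessarily the thread that gives it back (the last reader). A mutex has an owner and must be unlocked by the thread that locked it, while a semaphore has no such restriction.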

With multiple writer threads, locking and immediately unlocking the mutex “protect_cnt_mutex” can be added at the beginning of the writer thread. This is needed to make sure that the writers do not starve the readers.

Trace and debug

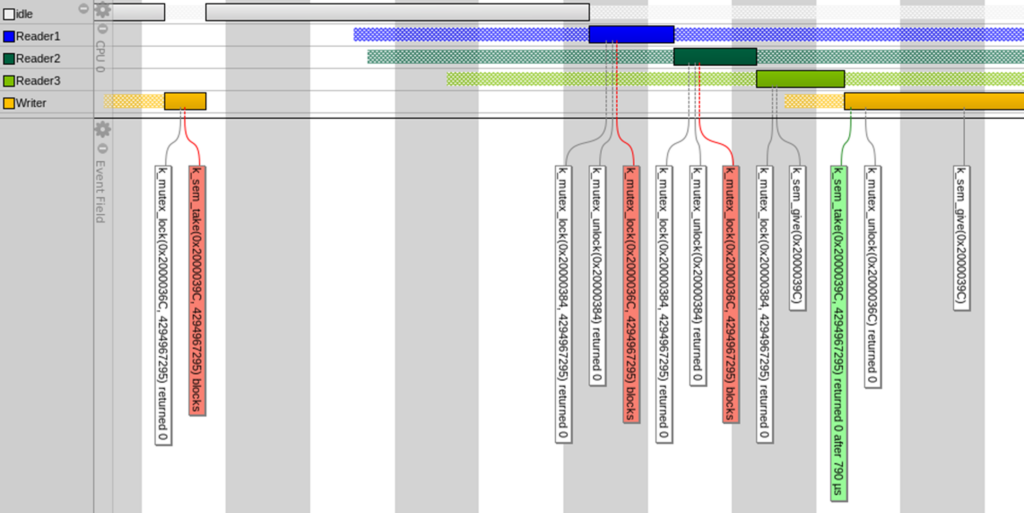

Tracealyzer can be used to help in understanding the behaviour of the system by providing a visual representation of how the different threads are interacting with each other over time. It can help to identify and diagnose problems such as deadlocks, priority inversions, and other synchronization issues by showing the order of execution and the flow of control between the different threads and tasks.

In this trace, you can see that the writer starts executing and takes the “new_readers_wait_for_writer_mutex” mutex. It then blocks on the semaphore, because readers are still using the resource. The readers resume executing, then block at the beginning of their next round on “new_readers_wait_for_writer_mutex”.

When the readers finish their current reading round, they all block at the beginning of the next round. Before it does so, the last reader to finish gives the semaphore, which signals to the writer that it can now take it. The writer takes the semaphore, unlocks the “new_readers_wait_for_writer_mutex” mutex, and starts writing. When it finishes writing, it gives the semaphore so the readers can read the new value.

Conclusion

In conclusion, the readers-writer(s) problem is another common problem that occurs when multiple threads (readers and writers) need to access a shared resource. We showed a solution that uses mutexes and semaphores.

It is important to note that the solutions provided are just examples of how these problems can be solved. There are many other ways to solve these problems, like lock-free mechanisms, and the best solution will depend on the specific requirements and constraints of your application. It is important to carefully consider the trade-offs between different approaches and choose the one that best meets the needs of your application.

In part 4 of this blog series, we will show how to solve the readers-writer(s) problem using the POSIX read-write lock (rwlock) implementation that is available in Zephyr.

If you’re interested in learning more about synchronization and communication mechanisms available in Zephyr, be sure to follow our full Zephyr training course. This course goes in-depth on the blocking and lock-free mechanisms available in Zephyr and covers a wide range of other multithreading problems and solutions.

Our course is designed for developers who want to learn how to write efficient and reliable multithreaded applications using the Zephyr RTOS. Whether you’re new to real-time systems or an experienced developer, this course will provide you with the knowledge and skills you need to develop high-performance multithreaded applications in Zephyr.

For more information, see the course outline.